Operyn helps teams quickly understand AI visibility and know where to focus without guessing.

See how AI answers describe your brand, which sources they rely on, and how competitors appear, all in one clear view.

Making sense of AI visibility

AI answers don’t work like search results — there are no rankings or volume metrics to rely on.

Visibility in AI is about how answers describe brands and which sources they trust.

Because of this, understanding AI visibility starts with the answers themselves.

Operyn is built to help teams see this clearly and get oriented faster.

Below are the core ways teams use Operyn to understand AI visibility.

How AI understands your brand

Features included

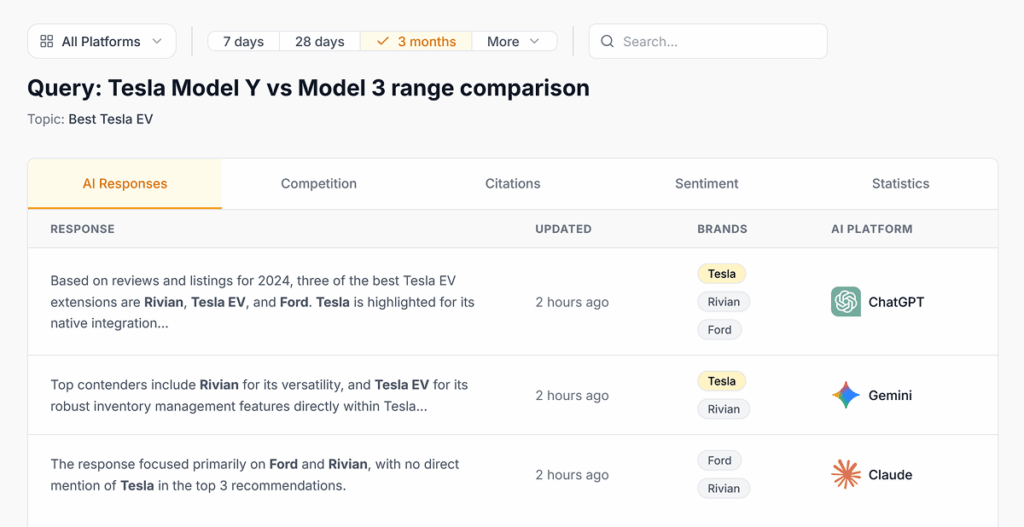

Full AI answers for brand-related prompts

Operyn captures the complete answers generated by AI tools when questions relate to your brand, products, or category. This lets teams see the actual language AI uses, not summaries or inferred signals.

Brand mentions and descriptions inside AI answers

Within each answer, Operyn highlights where and how your brand appears — whether it’s mentioned directly, referenced indirectly, or omitted entirely.

Sources cited alongside those answers

Operyn shows which sources AI relies on when forming answers about your brand, providing context on where the information comes from.

What this helps you understand

How AI describes your brand in natural language

You can see how AI explains your brand to users — what it emphasizes, how it positions you, and which attributes or use cases are associated with your name.

Whether your brand is represented positively, neutrally, negatively, or not at all

Instead of guessing based on traffic or rankings, teams can directly assess presence and tone inside AI answers.

What information AI uses when talking about your brand

By reviewing cited sources, teams can understand which content shapes AI’s understanding and which sources carry the most influence.

Why this matters

AI answers increasingly shape first impressions

For many users, AI answers are becoming the first place they learn about a brand or category.

Brand visibility in AI is about representation, not rankings

There is no position to track; visibility is defined by whether and how your brand appears in answers.

Without seeing the actual answers, teams are guessing

Relying on indirect signals makes it difficult to understand how AI truly represents your brand. Seeing the answers removes that uncertainty.

How AI answers evolve over time

Features included

AI answers captured across multiple runs

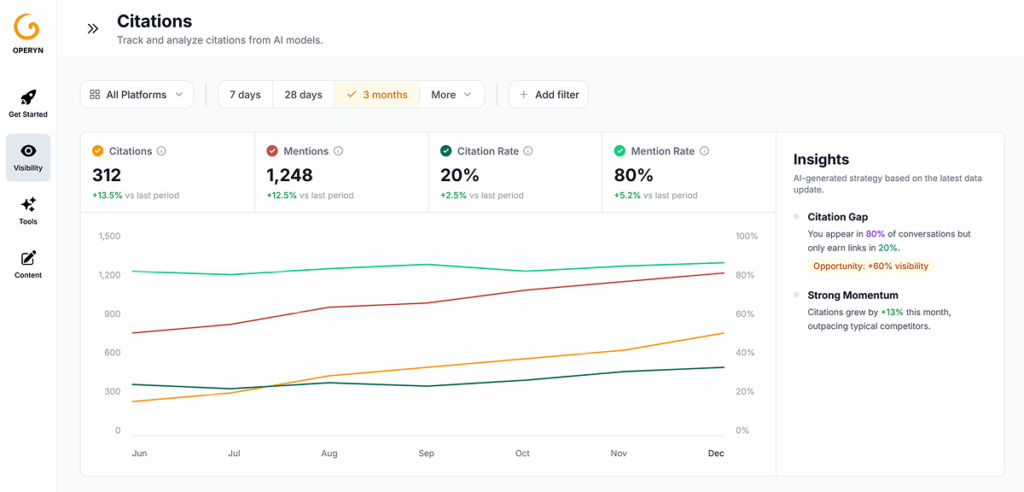

Operyn records AI answers for the same prompts over time, so teams can review how responses change from one run to the next instead of relying on a single snapshot.

Citation lists associated with each run

For every captured answer, Operyn keeps track of the sources cited at that point in time, so shifts in which sources AI trusts are visible.

Brand and competitor presence over time

Operyn shows how often your brand and competitors appear in AI answers across runs, making shifts in visibility easier to spot.

What this helps you understand

Whether AI answers are stable or changing

Teams can see if answers remain consistent or fluctuate, helping distinguish meaningful change from short-term variation.

What exactly changed when visibility shifts happen

Instead of reacting to a vague sense of change, teams can review whether differences come from wording, cited sources, or brand presence.

How changes affect brands and competitors differently

By comparing presence over time, teams can understand whether changes impact the whole category or specific brands.

Why this matters

AI answers can change without notice

AI systems update frequently, and answers may shift even when your own content hasn’t changed.

Reacting without understanding leads to misguided decisions

Without context, teams risk responding to noise rather than meaningful change.

Tracking evolution builds confidence before taking action

Seeing how answers evolve over time helps teams move from uncertainty to informed discussion and next steps.

Your category and competitive landscape in AI

Features included

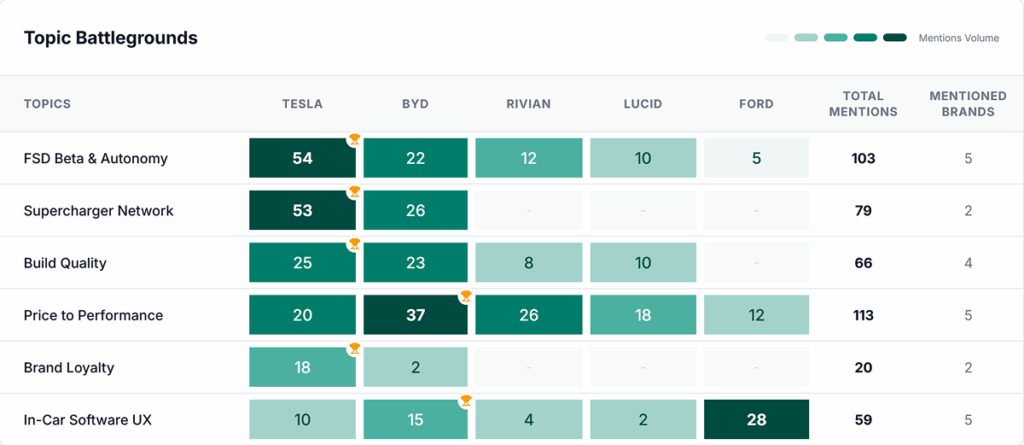

Category-level AI answers related to your brand

Operyn surfaces AI answers that relate to your broader category, not just your brand name. This helps teams see how AI frames the space they operate in.

Direct and indirect competitors appearing in AI answers

Within those category-level answers, Operyn shows which other brands appear alongside yours — including both obvious competitors and less expected alternatives.

Competitor list generated by the system and adjustable manually

Operyn identifies competitors based on AI answers, while still allowing teams to add or refine the list manually to reflect real-world context.

What this helps you understand

How AI defines your competitive set

You can see how AI defines the competitive set around your brand, which may differ from traditional SEO or internal assumptions.

Who you are effectively competing with in AI answers

By reviewing which brands appear in the same answers, teams can understand where attention is shared and where differentiation matters.

Whether visibility changes are competitive or category-wide

Seeing multiple brands together helps distinguish between broader category shifts and changes affecting specific competitors.

Why this matters

AI competition does not follow traditional SEO rules

Brands may appear in AI answers regardless of search rankings or keyword ownership.

The AI-defined competitive set can be unexpected

Indirect or emerging competitors may influence visibility even if they’re not tracked in existing SEO tools.

Understanding the landscape prevents misalignment and wasted effort

Clear context helps teams avoid reacting to the wrong signals and focus on what actually affects brand representation in AI answers.

Where to focus attention right now

Features included

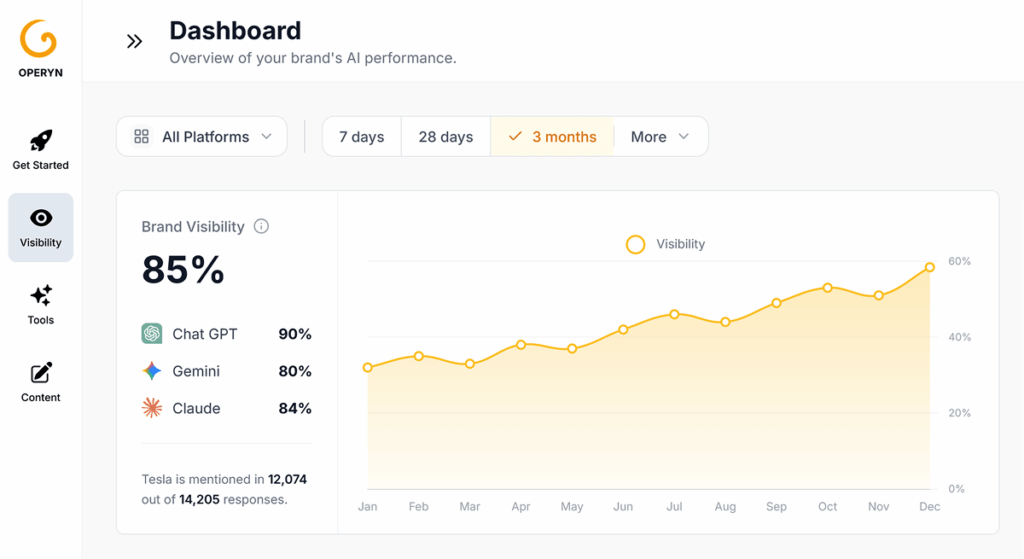

Dashboard views aggregating answers, citations, and competitors

Operyn brings together AI answers, cited sources, and competitor presence into a single view, so teams don’t need to review each signal in isolation.

Summary-level views across prompts, categories, and time

High-level summaries make it easier to see patterns across multiple prompts, categories, and time periods without diving into every individual answer.

What this helps you understand

Where changes are happening across your AI visibility

Teams can quickly see which areas are stable and which are changing, helping them decide where deeper review is needed.

Which areas deserve closer review versus monitoring

Not every shift requires action. Clear summaries help distinguish between signals worth investigating and those that can simply be observed.

How different signals connect at a high level

By viewing answers, sources, and competitors together, teams can understand relationships between changes instead of treating each signal separately.

Why this matters

Teams can’t deeply analyze everything all the time

Monitoring AI visibility produces a growing amount of information, and reviewing everything in detail is not realistic.

Clear overviews reduce overwhelm and shorten time to insight

When information is organized and connected, teams spend less time figuring out what’s happening.

Better focus leads to faster, more confident next steps

Knowing where to look first makes it easier for teams to discuss, prioritize, and decide what to do next.

Who this is for

Teams adapting to AI answers alongside SEO

You’re already working with SEO or content, but starting to notice that AI answers are changing how people discover information. Rankings and keywords no longer explain everything, and you need a clearer way to understand what AI is actually saying.

Teams responsible for brand visibility and perception

You care about how your brand shows up to users, but don’t yet have a clear view of how AI represents it. You need to understand brand presence and context beyond traditional performance dashboards.

Agencies and consultants exploring AEO for clients

Your clients are asking about AI visibility, but existing tools don’t clearly explain AI answers. You need a structured way to review, discuss, and communicate how brands appear in AI-driven results.